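Background

The "One Week of Algorithm Practice" activity organized by Datawhale walks through a short but fairly complete data mining project, so that participants can quickly get familiar with the practical workflow.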

GitHub Link

GitHub - Datawhale Datamining Practice - awyd234

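Task description

[Task 2 - Model Evaluation] Building on Task 1, record a score table of accuracy, precision, recall, F1-score, and AUC for the 7 models, and plot their ROC curves.

Evaluation metrics

For binary classification, the commonly used evaluation metrics are precision, recall, and F1-score. The class of interest is usually treated as the positive class, and all other classes as the negative class.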
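To make these definitions concrete, here is a minimal sketch that computes them from the confusion counts (TP, FP, FN, TN). The function name `binary_metrics` and the sample labels are made up for illustration; the project itself relies on `sklearn.metrics` rather than hand-written code like this.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary problem.

    `positive` is the label treated as the positive class;
    every other label counts as negative.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    # Fall back to 0.0 when a denominator is zero (e.g. no positive predictions)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: 3 positives, 5 negatives
m = binary_metrics([1, 1, 1, 0, 0, 0, 0, 0],
                   [1, 0, 0, 1, 0, 0, 0, 0])
print(m)
```

With these labels there is 1 true positive, 1 false positive, and 2 false negatives, so precision is 1/2 and recall is 1/3 even though accuracy is 5/8. This is the same pattern as in the result tables of this post: accuracy alone hides a low recall on the positive class.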

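Procedure

Plotting ROC curves requires matplotlib

    pip install -i https://pypi.doubanio.com/simple/ matplotlib

Following the approach in the blog of fellow participant 004-Sm1les, I moved the training step and the evaluation step into a single standalone function

    from sklearn import metrics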
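The full function is not reproduced here, so the following is only a sketch of what such a train-and-evaluate helper might look like. The names `evaluate` and `plot_roc`, and the synthetic data from `make_classification`, are assumptions for illustration and are not the author's code or dataset.

```python
from sklearn import metrics
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def evaluate(clf, X_train, y_train, X_test, y_test):
    """Fit clf, then return per-split scores and ROC curve points."""
    clf.fit(X_train, y_train)
    scores, roc_points = {}, {}
    for name, X, y in (("train", X_train, y_train), ("test", X_test, y_test)):
        y_pred = clf.predict(X)
        # Probability of the positive class, needed for AUC and the ROC curve
        y_proba = clf.predict_proba(X)[:, 1]
        scores[name] = {
            "Accuracy": metrics.accuracy_score(y, y_pred),
            "Precision": metrics.precision_score(y, y_pred),
            "Recall": metrics.recall_score(y, y_pred),
            "F1-score": metrics.f1_score(y, y_pred),
            "AUC": metrics.roc_auc_score(y, y_proba),
        }
        fpr, tpr, _ = metrics.roc_curve(y, y_proba)
        roc_points[name] = (fpr, tpr)
    return scores, roc_points


def plot_roc(roc_points, title="ROC curve"):
    """Draw the train and test ROC curves on one figure."""
    # Imported lazily so that computing the scores does not require matplotlib
    from matplotlib import pyplot as plt

    for name, (fpr, tpr) in roc_points.items():
        plt.plot(fpr, tpr, label=name)
    plt.plot([0, 1], [0, 1], linestyle="--", color="grey")  # chance level
    plt.xlabel("False Positive Rate")
    plt.ylabel("True Positive Rate")
    plt.title(title)
    plt.legend()
    plt.show()


# Synthetic stand-in data; the real project uses its own dataset from Task 1
X, y = make_classification(n_samples=500, n_features=10, random_state=2018)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=2018)

scores, roc_points = evaluate(
    LogisticRegression(random_state=2018), X_train, y_train, X_test, y_test)
for metric_name in scores["train"]:
    print(metric_name, scores["train"][metric_name], scores["test"][metric_name])
```

Calling `plot_roc(roc_points)` afterwards would draw both curves. Any classifier that exposes `predict_proba` can be passed in the same way, which is presumably why the SVM below is created with `probability=True`.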

Generated results

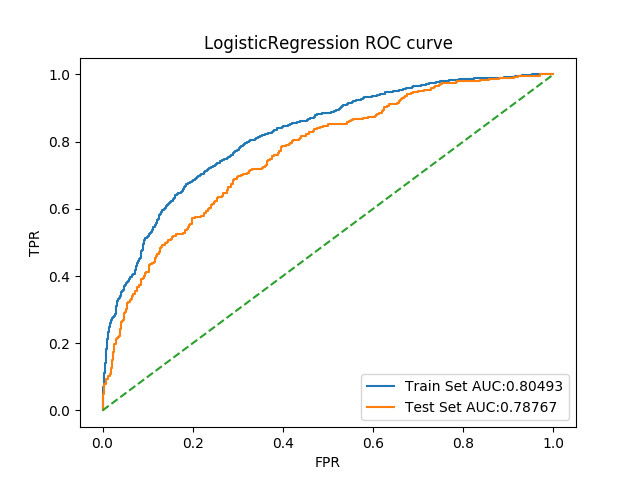

1. Logistic Regression

1.1 Code

    from sklearn.linear_model import LogisticRegression
    lr = LogisticRegression(random_state=2018)

1.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 0.8049293657950105 | 0.7876664330763841 |
| Precision | 0.7069351230425056 | 0.6609195402298851 |
| F1-score | 0.4933645589383294 | 0.4315196998123827 |
| Recall | 0.37889688249400477 | 0.3203342618384401 |
| AUC | 0.8198240444948494 | 0.7657454644090431 |

1.3 ROC curve

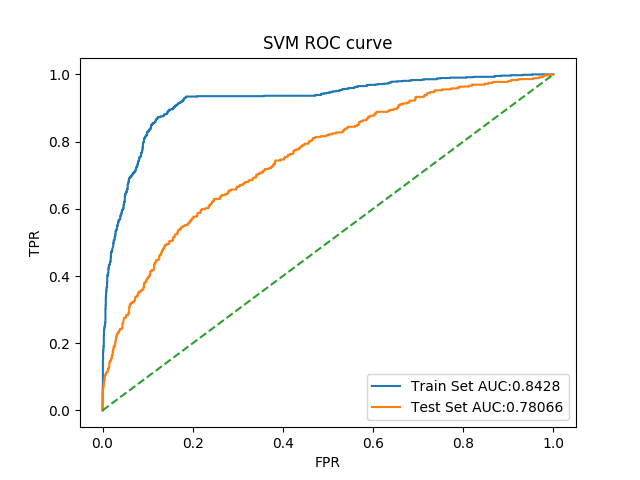

2. SVM

2.1 Code

    from sklearn.svm import SVC
    svm_clf = SVC(random_state=2018, probability=True)

2.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 0.8428013225127743 | 0.7806587245970568 |
| Precision | 0.9146666666666666 | 0.7017543859649122 |
| F1-score | 0.5674110835401158 | 0.3382663847780127 |
| Recall | 0.41127098321342925 | 0.22284122562674094 |
| AUC | 0.9217853154299663 | 0.7531297924947575 |

2.3 ROC curve

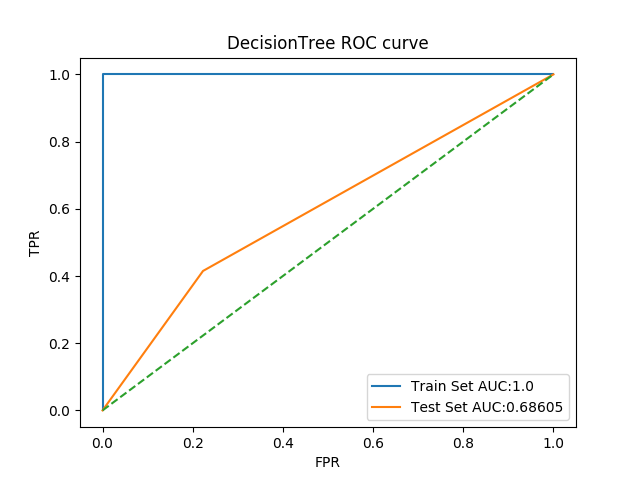

3. DecisionTree

3.1 Code

    from sklearn import tree
    dt_clf = tree.DecisionTreeClassifier(random_state=2018)

3.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 1.0 | 0.6860546601261388 |
| Precision | 1.0 | 0.3850129198966408 |
| F1-score | 1.0 | 0.39946380697050937 |
| Recall | 1.0 | 0.415041782729805 |
| AUC | 1.0 | 0.5960976703911197 |

3.3 ROC curve

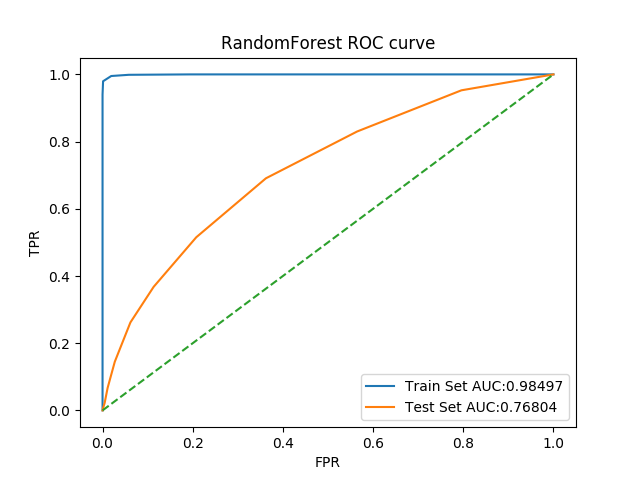

4. RandomForest

4.1 Code

    from sklearn.ensemble import RandomForestClassifier
    random_forest_clf = RandomForestClassifier(random_state=2018)

4.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 0.9849714457469192 | 0.7680448493342676 |
| Precision | 1.0 | 0.5875 |
| F1-score | 0.9690976514215082 | 0.3622350674373796 |
| Recall | 0.9400479616306955 | 0.2618384401114206 |
| AUC | 0.9995264919231882 | 0.7194427926095167 |

4.3 ROC curve

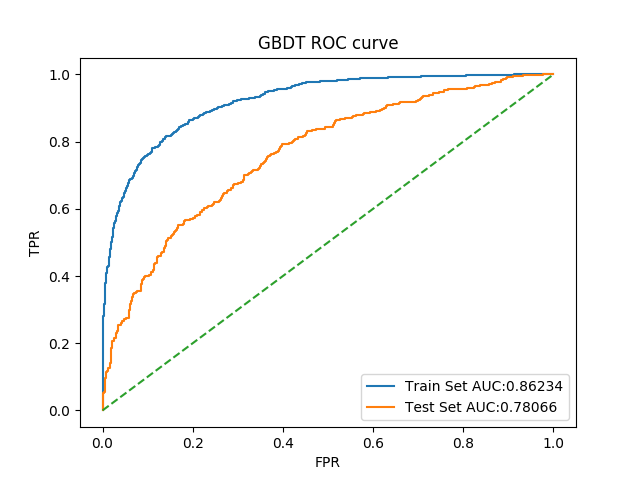

5. GBDT

5.1 Code

    from sklearn.ensemble import GradientBoostingClassifier
    gbdt_clf = GradientBoostingClassifier(random_state=2018)

5.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 0.8623384430417794 | 0.7806587245970568 |
| Precision | 0.8836734693877552 | 0.6116504854368932 |
| F1-score | 0.6540785498489425 | 0.44601769911504424 |
| Recall | 0.5191846522781774 | 0.35097493036211697 |
| AUC | 0.9207464353427005 | 0.7632677120173599 |

5.3 ROC curve

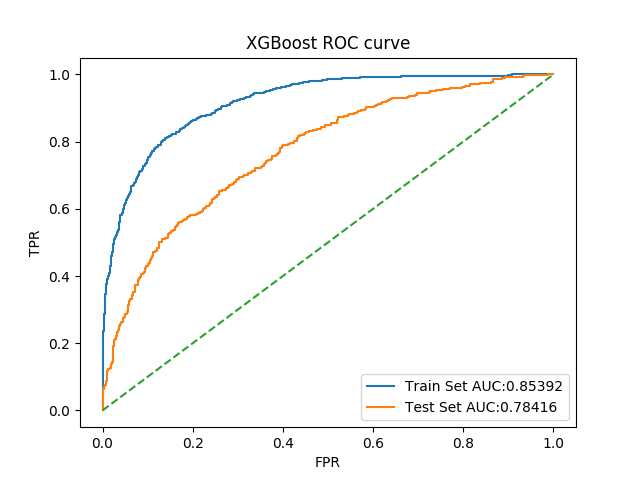

6. XGBoost

6.1 Code

    from xgboost import XGBClassifier
    xgbt_clf = XGBClassifier(random_state=2018)

6.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 0.8539224526600541 | 0.7841625788367204 |
| Precision | 0.875 | 0.624390243902439 |
| F1-score | 0.6255778120184899 | 0.45390070921985815 |
| Recall | 0.486810551558753 | 0.3565459610027855 |
| AUC | 0.9174710772897927 | 0.7708522424963224 |

6.3 ROC curve

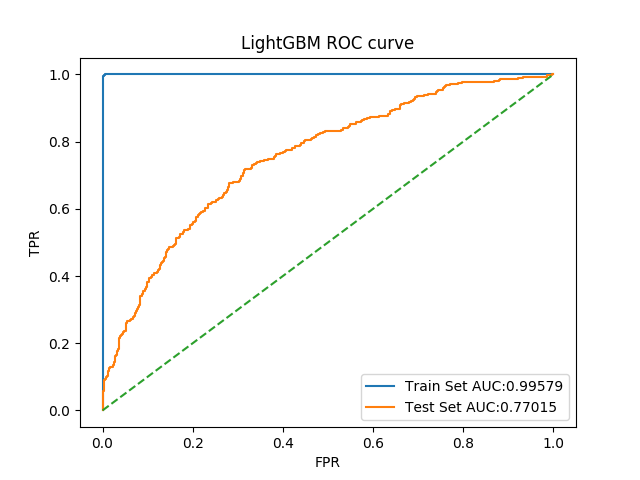

7. LightGBM

7.1 Code

    from lightgbm import LGBMClassifier
    light_gbm_clf = LGBMClassifier(random_state=2018)

7.2 Evaluation results

| Evaluation | Train Set | Test Set |
|---|---|---|
| Accuracy | 0.9957920048091373 | 0.7701471618780659 |
| Precision | 1.0 | 0.5688888888888889 |
| F1-score | 0.9915356711003627 | 0.4383561643835616 |
| Recall | 0.9832134292565947 | 0.3565459610027855 |
| AUC | 0.9999826853318788 | 0.7535210165566023 |

7.3 ROC curve

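Problems encountered

1. matplotlib import problem

When I tried to import matplotlib

    from matplotlib import pyplot as plt

the following error appeared

    Traceback (most recent call last):

I found a fix online: in the .matplotlib directory under my home directory, create a file named matplotlibrc and write the setting backend: TkAgg into it

    echo 'backend: TkAgg' > ~/.matplotlib/matplotlibrc

After that, the import runs normally.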
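The same fix can be scripted from Python, which may be handy when the `.matplotlib` directory does not exist yet. This is only a sketch: `write_backend_config` is a hypothetical helper, and the demo writes into a temporary directory so that it does not touch a real configuration.

```python
import tempfile
from pathlib import Path


def write_backend_config(config_dir, backend="TkAgg"):
    """Create config_dir if needed and write a one-line matplotlibrc into it."""
    config_dir = Path(config_dir).expanduser()
    config_dir.mkdir(parents=True, exist_ok=True)
    rc_file = config_dir / "matplotlibrc"
    rc_file.write_text(f"backend: {backend}\n", encoding="utf-8")
    return rc_file


# Demo against a throwaway directory instead of the real ~/.matplotlib
with tempfile.TemporaryDirectory() as tmp:
    rc_file = write_backend_config(tmp)
    written = rc_file.read_text(encoding="utf-8")

print(repr(written))
```

To reproduce the shell command above, the call would be `write_backend_config("~/.matplotlib")`. A per-script alternative is to call `matplotlib.use("TkAgg")` before importing `pyplot`, which selects the backend without editing any file.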

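2. Some evaluation results are 0 for logistic regression and SVM

Reportedly this is caused by feeding the models data that has not been preprocessed. Presumably the classifier then predicts no positive samples at all, so the two denominators, that of precision (TP + FP) and that of F1 (precision + recall), are both 0 and the scores come out as 0. Standardizing the features works as a temporary fix

    from sklearn.preprocessing import StandardScaler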
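As a rough illustration of how the scaler is usually applied, the sketch below fits it on the training split only and reuses the learned mean and standard deviation on the test split. The small arrays are invented for the example and are not the project's data.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical feature matrices whose columns live on very different scales
X_train = np.array([[1.0, 1000.0],
                    [2.0, 3000.0],
                    [3.0, 5000.0]])
X_test = np.array([[2.0, 4000.0]])

scaler = StandardScaler()
# Learn per-column mean and standard deviation from the training data only
X_train_scaled = scaler.fit_transform(X_train)
# Apply the training statistics to the test data (no refitting)
X_test_scaled = scaler.transform(X_test)

print(X_train_scaled.mean(axis=0), X_train_scaled.std(axis=0))
print(X_test_scaled)
```

Fitting on the training split alone keeps test-set information out of the preprocessing step. The scaled arrays can then be passed to `LogisticRegression` or `SVC` in place of the raw features.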